Over the past ten years, a wide variety of both simple and complex algorithms has been developed to assist the microscopist in removing blur from digital images. The most commonly utilized algorithms for deconvolution in optical microscopy can be divided into two classes: deblurring and image restoration. Deblurring algorithms are fundamentally two-dimensional, because they apply an operation plane-by-plane to each two-dimensional plane of a three-dimensional image stack. In contrast, image restoration algorithms are properly termed "three-dimensional" because they operate simultaneously on every pixel in a three-dimensional image stack.

Before continuing, several technical terms must be defined. The object refers to a three-dimensional pattern of light emitted by fluorescent structures in the microscope's field of view. A raw image refers to an unprocessed digital image or image stack acquired from the microscope. Particular regions of interest within the image are referred to as features.

Deblurring Algorithms

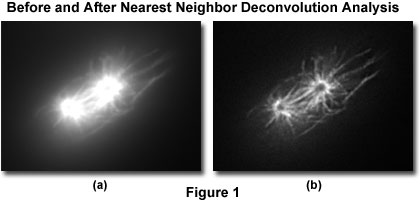

Although common algorithms often referred to as nearest-neighbor, multi-neighbor, no-neighbor, and unsharp masking are fundamentally two-dimensional, they are classified for the purposes of this discussion as deblurring algorithms. As a class, these algorithms apply an operation plane-by-plane to each two-dimensional plane of a three-dimensional image stack. For example, the nearest-neighbor algorithm operates on the plane z by blurring the neighboring planes (z + 1 and z - 1, using a digital blurring filter), then subtracting the blurred planes from the z plane. Multi-neighbor techniques extend this concept to a user-selectable number of planes. A three-dimensional stack is processed by applying the algorithm to every plane in the stack. In this manner, an estimate of the blur is removed from each plane. Figure 1 presents a single focal plane selected from a three-dimensional stack of optical sections, before processing (Figure 1(a)), and after deconvolution by a nearest-neighbor algorithm (Figure 1(b)). The data were acquired from a preparation of Xenopus cells stained for microtubules.

The deblurring algorithms are computationally economical because they involve relatively simple calculations performed on single image planes. However, there are several major disadvantages to these approaches. First, noise from several planes is added together. Second, deblurring algorithms remove blurred signal and thus reduce overall signal levels. Third, features whose point spread functions overlap in particular z planes may be sharpened in planes where they do not really belong (in effect, the apparent position of features may be altered). This problem is particularly severe when deblurring single two-dimensional images because they often contain diffraction rings or light from other structures that will then be sharpened as if they were in that focal plane. Taken together, these findings indicate that deblurring algorithms improve contrast, but they do so at the expense of decreasing the signal-to-noise ratio and may also introduce structural artifacts in the image.

Two-dimensional deblurring algorithms may be useful in situations where a quick deblurring operation is warranted or when computer power is limited. These routines work best on specimens that have fluorescent structures distributed discretely, especially in the z-axis. However, simple deblurring algorithms induce artifactual changes in the relative intensities of pixels and should be applied with caution (or preferably, not applied at all) to images destined for morphometric measurements, quantitative fluorescence intensity measurements, and intensity ratio calculations.

Image Restoration Algorithms

The primary function of image restoration algorithms in deconvolution microscopy is to deal with blur as a three-dimensional problem. Instead of subtracting blur, they attempt to reassign blurred light to the proper in-focus location. This is performed by reversing the convolution operation inherent in the imaging system. If the imaging system is modeled as a convolution of the object with the point spread function, then a deconvolution of the raw image should restore the object. However, the object cannot be restored perfectly because of the fundamental limitations inherent in the imaging system and the image-formation model. The best that can be done is to estimate the object given these limitations. Restoration algorithms estimate the object, following the logic that a good estimate of the object is one that, when convolved with the point spread function, yields the raw image.

An advantage of this formulation is that convolution operations on large matrices (such as a three-dimensional image stack) can be computed very simply using the mathematical technique of Fourier transformation. If the image and point spread function are transformed into Fourier space, the convolution of the image by the point spread function can be computed simply by multiplying their Fourier transforms. The resulting Fourier image can then be back-transformed into real three-dimensional coordinates.

Inverse Filter Algorithms

The first image deconvolution algorithms to be developed were termed inverse filters. Such filters, along with their cousins the regularized inverse filters, have been employed in electronic signal processing since the 1960s and were first applied to images in the late 1970s. In most image-processing software programs, these algorithms go by a variety of names including Wiener deconvolution, Regularized Least Squares, Linear Least Squares, and Tikhonov-Miller regularization.

An inverse filter functions by taking the Fourier transform of an image and dividing it by the Fourier transform of the point spread function. Because division in Fourier space is the equivalent of deconvolution in real space, this is the simplest method to reverse the convolution that produced the blurry image. The calculation is rapid, about as fast as the two-dimensional deblurring methods discussed above. However, the utility of this method is limited by noise amplification. During division in Fourier space, small noise variations in the Fourier transform are amplified by the division operation. The result is that blur removal is compromised as a tradeoff against a gain in noise. In addition, an artifact known as ringing can be introduced.

Noise amplification and ringing can be reduced by making some assumptions about the structure of the object that gave rise to the image. For instance, if the object is assumed to be relatively smooth, noisy solutions with rough edges can be eliminated. This approach is termed regularization. A regularized inverse filter can be described as a statistical estimator that applies a certain kind of constraint on possible estimates, given some assumption about the object: in this case, smoothness. A constraint on smoothness enables the algorithm to select a reasonable estimate out of the large number of possible estimates that can arise because of noise variability.

Regularization can be applied in one step within an inverse filter, or it can be applied iteratively. The result is usually smoothed (stripped of higher Fourier frequencies). Much of the "roughness" being removed in the image occurs at Fourier frequencies well beyond the resolution limit and, therefore, the process does not eliminate structures recorded by the microscope. However, because there is a potential for loss of detail, software implementations of inverse filters typically include an adjustable parameter that enables the user to control the tradeoff between smoothing and noise amplification.

Constrained Iterative Algorithms

In order to improve the performance of inverse filters, a variety of additional three-dimensional algorithms can be applied to the task of image restoration. These methods are collectively termed constrained iterative algorithms, and operate in successive cycles (thus, the term "iterative"). In addition, these algorithms also usually apply constraints on possible solutions, which not only help to minimize noise and other distortion, but also increase the power to restore blurred signal.

A typical constrained iterative algorithm operates as follows. First, an initial estimate of the object is performed, which is usually the raw image itself. The estimate is then convolved with the point spread function, and the resulting "blurred estimate" is compared with the original raw image. This comparison is employed to compute an error criterion that represents how similar the blurred estimate is to the raw image. Often referred to as a figure of merit, the error criterion is then utilized to alter the estimate in such a way that the error is reduced. A new iteration then takes place: the new estimate is convolved with the point spread function, a new error criterion is computed, and so on. The best estimate will be the one that minimizes the error criterion. As the algorithm progresses, each time the error criterion is determined not to have been minimized, the new estimate is blurred again, and the error criterion recomputed. The entire process is repeated until the error criterion is minimized or reaches a defined threshold. The final restored image is the object estimate at the last iteration.

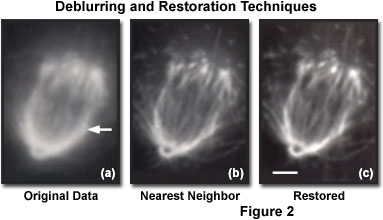

The data presented in Figure 2 are taken from a three-dimensional image stack containing 70 optical sections, and recorded at intervals of 0.2 micrometers through a single XLK2 cell. A widefield imaging system, equipped with a high numerical aperture (1.40) oil immersion objective, was employed to acquire the images. The left-hand image (Figure 2(a), labeled Original Data) is a single focal plane taken from the three-dimensional stack, before the application of any data processing. Deblurring by a nearest-neighbor algorithm produced the result shown in the image labeled Nearest Neighbor (Figure 2(b)). The third image (Figure 2(c), Restored) illustrates the result of restoration by a commercially marketed constrained iterative deconvolution software product. Both deblurring and restoration improve contrast, but the signal-to-noise ratio is significantly lower in the deblurred image than in the restored image. The scale bar in Figure 2(c) represents a length of 2 micrometers, and the arrow (Figure 2(a))designates the position of the line plot presented in Figure 4.

A majority of the algorithms currently applied to deconvolution of optical images from the microscope incorporate constraints on the range of allowable estimates. A commonly employed constraint is smoothing or regularization, as discussed above. As iterations proceed, the algorithm will tend to amplify noise, so most implementations suppress this with a smoothing or regularization filter.

Another common constraint is nonnegativity, which means that any pixel value in the estimate that becomes negative during the course of an iteration is automatically set to zero. Pixel values can often become negative as the result of a Fourier transformation or subtraction operation in the algorithm. The nonnegativity constraint is realistic because an object cannot have negative fluorescence. It is essentially a constraint on possible estimates, given our knowledge of the object's structure. Other types of constraints include boundary constraints on pixel saturation, constraints on noise statistics, and other statistical constraints.

Classical Algorithms for Constrained Iterative Deconvolution

The first applications of constrained iterative deconvolution algorithms to images captured in the microscope were based on the Jansson-Van Cittert (JVC) algorithm, a procedure first developed for application in spectroscopy. Agard later modified this algorithm for analysis of digital microscope images in a landmark series of investigations. Commercial firms such as Vaytek, Intelligent Imaging Innovations, Applied Precision, Carl Zeiss, and Bitplane currently market various implementations of Agard's modified algorithm. In addition, several research groups have developed a regularized least squares minimization method that has been marketed by Vaytek and Scanalytics. These algorithms utilize an additive or multiplicative error criterion to update the estimate at each iteration.

Statistical Iterative Algorithms

Another family of iterative algorithms uses probabilistic error criteria borrowed from statistical theory. Likelihood, a reverse variation of probability, is employed in the maximum likelihood estimation (MLE) and expectation maximization (EM) commercially available algorithms implemented by SVI, Bitplane, ImproVision, Carl Zeiss, and Autoquant. Maximum likelihood estimation is a popular statistical tool with applications in many branches of science. A related statistical measure, maximum entropy (ME - not to be confused with expectation maximization, EM) has been implemented in image deconvolution by Carl Zeiss.

Statistical algorithms are more computationally intensive than the classical methods and can take significantly longer to reach a solution. However, they may restore images to a slightly higher degree of resolution than the classical algorithms. These algorithms also have the advantage that they impose constraints on the expected noise statistic (in effect, a Poisson or a Gaussian distribution). As a result, statistical algorithms have a more subtle noise policy than simply regularization, and they may produce better results on noisy images. However, the choice of an appropriate noise statistic may depend on the imaging condition, and some commercial software packages are more flexible than others in this regard.

Blind Deconvolution Algorithms

Blind deconvolution is a relatively new technique that greatly simplifies the application of deconvolution for the non-specialist, but the method is not yet widely available in the commercial arena. The algorithm was developed by altering the maximum likelihood estimation procedure so that not only the object, but also the point spread function is estimated. Using this approach, an initial estimate of the object is made and the estimate is then convolved with a theoretical point spread function calculated from optical parameters of the imaging system. The resulting blurred estimate is compared with the raw image, a correction is computed, and this correction is employed to generate a new estimate, as described above. This same correction is also applied to the point spread function, generating a new point spread function estimate. In further iterations, the point spread function estimate and the object estimate are updated together.

Blind deconvolution works quite well, not only on high-quality images, but also on noisy images or those suffering from spherical aberration. The algorithm begins with a theoretical point spread function, but adapts it to the specific data being deconvolved. In this regard, it spares the user from the difficult process of experimentally acquiring a high-quality empirical point spread function. In addition, because the algorithm adjusts the point spread function to the data, it can partially correct for spherical aberration. However, this computational correction should be a last resort, because it is far more desirable to minimize spherical aberration during image acquisition.

The results of applying three different processing algorithms to the same data set are presented in Figure 3. The original three-dimensional data are 192 optical sections of a fruit fly embryo leg acquired in 0.4-micrometer z-axis steps with a widefield fluorescence microscope (1.25 NA oil objective). The images represent a single optical section selected from the three-dimensional stack. The original (raw) image is illustrated in Figure 3(a). The results of deblurring by a nearest neighbor algorithm appear in Figure 3(b), with processing parameters set for 95 percent haze removal. The same image slice is illustrated after deconvolution by an inverse (Wiener) filter (Figure 3(c)), and by iterative blind deconvolution (Figure 3(d)), incorporating an adaptive point spread function method.

Deconvolution of Confocal and Multiphoton Images

As might be expected, it is also possible to restore images acquired with a confocal or multiphoton optical microscope. The combination of confocal microscopy and deconvolution techniques improves resolution beyond what is generally attainable with either technique alone. However, the major benefit of deconvolving a confocal image is not so much the reassignment as the averaging of out-of-focus light, which results in decreased noise. Deconvolution of multiphoton images has also been successfully utilized to remove image artifacts and improve contrast. In all of these cases, care must be taken to apply the appropriate point spread function, especially if the confocal pinhole aperture is adjustable.

Implementation of Deconvolution Algorithms

Processing speed and quality are dramatically affected by how a given deconvolution algorithm is implemented by the software. The algorithm can be exercised in ways that reduce the number of iterations and accelerate convergence to produce a stable estimate. For example, the unoptimized Jansson-Van Cittert algorithm usually requires between 50 and 100 iterations to converge to an optimal estimate. By prefiltering the raw image to suppress noise and correcting with an additional error criterion on the first two iterations, the algorithm converges in only 5 to 10 iterations. In addition, a smoothing filter is usually introduced every five iterations to curtail noise amplification.

When using an empirical point spread function, it is critical to use a high-quality point spread function with minimal noise. No deconvolution package currently in the market uses the "raw" point spread function recorded directly from the microscope. Instead, the packages contain preprocessing routines that reduce noise and enforce radial symmetry by averaging the Fourier transform of the point spread function. Many software packages also enforce axial symmetry in the point spread function and thus assume the absence of spherical aberration. These steps reduce noise and aberrations, and make a large difference in the quality of restoration.

Another important aspect of deconvolution algorithm implementation is preprocessing of the raw image, via routines such as background subtraction, flatfield correction, bleaching correction, and lamp jitter correction. These operations can improve the signal-to-noise ratio and remove certain kinds of artifacts. Most commercially available software packages include such operations, and the user manual should be consulted for a detailed explanation of specific aspects of their implementation.

Other deconvolution algorithm implementation issues concern data representation. Images can be divided into subvolumes or represented as entire data blocks. Individual pixel values can be represented as integers or as floating-point numbers. Fourier transforms can be represented as floating-point numbers or as complex numbers. In general, the more faithful the data representation, the more computer memory and processor time required to deconvolve an image. Thus, there is a tradeoff between the speed of computation and the quality of restoration.

Conclusions

Iterative restoration algorithms differ from both deblurring algorithms and confocal microscopy in that they do not remove out-of-focus blur but instead attempt to reassign it to the correct image plane. In this manner, out-of-focus signal is utilized rather than being discarded. After restoration, pixel intensities within fluorescent structures increase, but the total summed intensity of each image stack remains the same, as intensities in formerly blurred areas diminish. Blur occurring in surrounding details of the object is moved back into focus, resulting in sharper definition of the object and better differentiation from the background. Better contrast and a higher signal-to-noise ratio are also usually achieved at the same time.

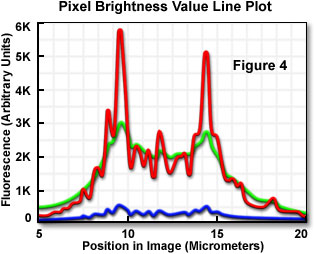

These properties are illustrated in Figure 2, where it is demonstrated that restoration improves image contrast and subsequently enables better resolution of objects, without the introduction of noise that occurs in deblurring methods. Perhaps more importantly for image analysis and quantitation, the sum of the fluorescence signal in the raw image is identical to that in the deconvolved image. When properly implemented, image restoration methods preserve total signal intensity but improve contrast by adjustment of signal position (Figure 4). Therefore, quantitative analysis of restored images is possible and, because of the improved contrast, often desirable.

The graphical plot presented in Figure 4 represents the pixel brightness values along a horizontal line traversing the cell illustrated in Figure 2 (the line position is shown by the arrow in Figure 2(a)). The original data are represented by the green line, the deblurred image data by the blue line, and the restored image data by the red line. As is apparent in the data, deblurring causes a significant loss of pixel intensity over the entire image, whereas restoration results in a gain of intensity in areas of specimen detail. A similar loss of image intensity as that seen with the deblurring method occurs with the application of any two-dimensional filter.

When used in conjunction with widefield microscopy, iterative restoration techniques are light efficient. This aspect is most valuable in light-limited applications such as high-resolution fluorescence imaging, where objects are typically small and contain few fluorophores, or in live-cell fluorescence imaging, where exposure times are limited by the extreme sensitivity of live cells to phototoxicity.