Accurate and Efficient Microscope Image Analysis with Deep-Learning-Based TruAI

Introduction

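Experiments often require data extracted from microscope images. For accurate image analysis, segmentation is essential for extracting the regions of interest from an image. A common segmentation method is to apply a threshold to the image intensity values or colors.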
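As an illustration of the threshold-based approach described above, the following is a minimal sketch in plain Python. The function names `threshold_mask` and `count_objects`, the toy image, and the threshold value of 100 are assumptions made for this example only; they are not part of cellSens or TruAI.

```python
from collections import deque

def threshold_mask(image, threshold):
    """Return a binary mask: True where the pixel intensity exceeds the threshold."""
    return [[pixel > threshold for pixel in row] for row in image]

def count_objects(mask):
    """Count 4-connected foreground regions in a binary mask using a flood fill."""
    rows = len(mask)
    cols = len(mask[0]) if mask else 0
    seen = [[False] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] and not seen[r][c]:
                count += 1  # found a new, unvisited object
                seen[r][c] = True
                queue = deque([(r, c)])
                while queue:
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        ny, nx = y + dy, x + dx
                        if (0 <= ny < rows and 0 <= nx < cols
                                and mask[ny][nx] and not seen[ny][nx]):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
    return count

# Toy "fluorescence" image (assumed values): two bright blobs on a dark background.
image = [
    [10, 12, 11, 10, 9, 10],
    [11, 200, 210, 10, 12, 11],
    [10, 205, 198, 11, 180, 10],
    [12, 10, 11, 10, 190, 12],
    [10, 11, 10, 12, 10, 11],
]
mask = threshold_mask(image, threshold=100)
print(count_objects(mask))
```

A fixed threshold like this works only when the objects are clearly brighter than the background, which is why the approach depends on staining and sufficient illumination.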

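Although effective, this approach can be time-consuming and can affect the condition of the sample, for example when it relies on fluorescent labeling or strong excitation light. Next-generation image analysis methods, such as our cellSens imaging software with deep-learning-based TruAI, help reduce the risk of sample damage while delivering both high efficiency and high accuracy.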

Application Examples Using TruAI

1) Label-free nucleus detection and segmentation

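To count cells, locate nuclei in cells and tissue, and evaluate cell area, researchers typically stain the nuclei fluorescently and segment them based on fluorescence intensity.

In contrast, TruAI can also segment nuclei from brightfield images. It works by training a neural network with the nucleus segmentation results from brightfield and fluorescence images.

Once the neural network has been created, this self-learning microscopy approach no longer requires fluorescent staining of the nuclei. Other advantages include: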

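- Less time spent labeling nuclei

- No labeling-induced effects on the cells

- No phototoxicity or photobleaching

- More information about the sample can be obtained by adding another channel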
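The training idea described above, in which segmentation results from fluorescence images serve as targets for brightfield inputs, can be sketched as a data-preparation step. This is only a sketch under stated assumptions: the helper names `fluorescence_to_mask` and `make_training_pairs`, the use of a simple threshold to derive the masks, and the toy pixel values are all hypothetical, and the code does not represent how TruAI prepares its training data internally.

```python
def fluorescence_to_mask(fluor_image, threshold):
    """Derive a binary nucleus mask (1 = nucleus) from a fluorescence image.

    Assumption: a plain intensity threshold is good enough to create targets.
    """
    return [[1 if pixel > threshold else 0 for pixel in row] for row in fluor_image]

def make_training_pairs(brightfield_images, fluorescence_images, threshold):
    """Pair each brightfield image (network input) with a fluorescence-derived mask (target)."""
    if len(brightfield_images) != len(fluorescence_images):
        raise ValueError("need one fluorescence image per brightfield image")
    pairs = []
    for bf, fl in zip(brightfield_images, fluorescence_images):
        same_shape = len(bf) == len(fl) and all(
            len(bf_row) == len(fl_row) for bf_row, fl_row in zip(bf, fl)
        )
        if not same_shape:
            raise ValueError("brightfield and fluorescence images must be co-registered")
        pairs.append((bf, fluorescence_to_mask(fl, threshold)))
    return pairs

# Toy example (assumed values): one low-contrast brightfield image and the
# fluorescence image of the same field of view.
brightfield = [[[120, 122, 121], [121, 118, 120]]]
fluorescence = [[[5, 240, 6], [4, 235, 7]]]

pairs = make_training_pairs(brightfield, fluorescence, threshold=100)
inputs, target = pairs[0]
print(target)
```

After training on such pairs, a network would need only the brightfield input at inference time, which is what makes the staining step unnecessary.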

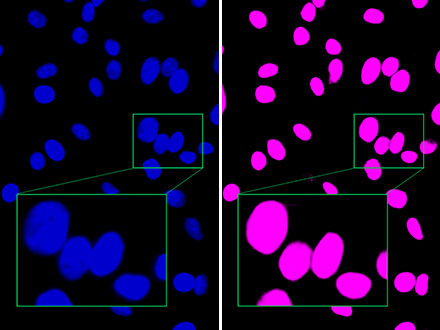

Label-free nucleus detection with TruAI (Figures 1 and 2)

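Figure 1: Although the brightfield image (left) has very low contrast because the cells are unstained, TruAI still detects the nuclei with high accuracy (right).

Figure 2: Compared with the fluorescence image (left), Olympus' TruAI clearly separates nuclei that lie close together (right), demonstrating high-accuracy detection.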

2) Quantitative analysis of fluorescently labeled cells at ultra-low light exposure

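Fluorescent labeling is an important tool in modern microscopy-based cell research. However, high exposure to excitation light causes photodamage or phototoxicity and has an observable effect on cell viability. Even when no direct effect is observed, strong illumination can alter the natural behavior of the cells, leading to undesired effects.

In long-term live-cell experiments, the ideal is to keep light exposure to a minimum during fluorescence observation. Technically, ultra-low exposure means analyzing images with very low signal levels and, consequently, a low signal-to-noise ratio. Our TruAI lets you analyze low-signal images with both robustness and precision.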
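To make the link between exposure and signal-to-noise ratio concrete, here is a minimal sketch of one common way to estimate it: the difference between mean signal and mean background, divided by the standard deviation of the background. The function name `signal_to_noise`, this particular definition, and the toy pixel values are assumptions for illustration; the source does not state how the signal-to-noise ratio was measured.

```python
from statistics import mean, pstdev

def signal_to_noise(image, mask):
    """Estimate SNR as (mean signal - mean background) / background standard deviation.

    `mask` marks signal pixels with a truthy value; all other pixels are background.
    """
    signal = [p for row, m_row in zip(image, mask) for p, m in zip(row, m_row) if m]
    background = [p for row, m_row in zip(image, mask) for p, m in zip(row, m_row) if not m]
    if not signal or not background:
        raise ValueError("mask must contain both signal and background pixels")
    noise = pstdev(background)
    if noise == 0:
        raise ValueError("background has zero variance; SNR is undefined")
    return (mean(signal) - mean(background)) / noise

# Toy data (assumed values): the same field imaged at high and at ultra-low exposure.
mask = [[0, 1, 0], [0, 1, 0]]
bright = [[10, 110, 12], [8, 110, 10]]
dim = [[10, 14, 12], [8, 14, 10]]

snr_bright = signal_to_noise(bright, mask)
snr_dim = signal_to_noise(dim, mask)
print(snr_bright > snr_dim)
```

In the dim image the nuclei are only a few gray levels above the background, so a fixed intensity threshold has little margin to separate signal from noise.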


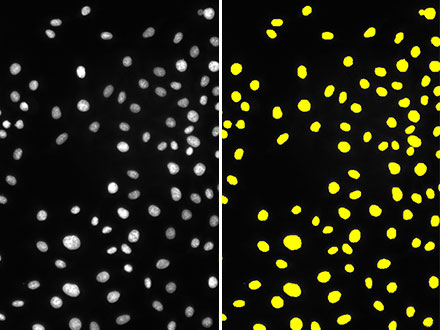

Figure 3: Nucleus detection (right) in a sufficiently bright fluorescence image (left) using the conventional brightness-threshold method.

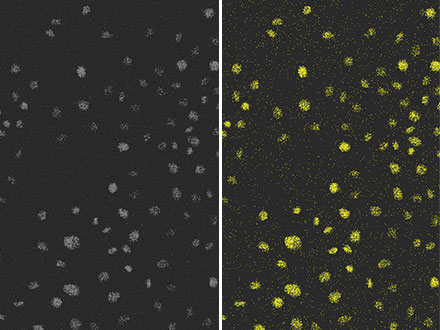

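Figure 4: Nucleus detection (right) using the same conventional method as in Figure 3, applied to a fluorescence image with a very poor signal-to-noise ratio caused by weak excitation light (left). The detection accuracy is noticeably lower.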

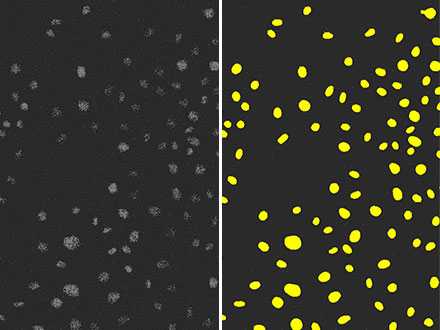

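Figure 5: Nucleus detection (right) using TruAI on a fluorescence image with a very poor signal-to-noise ratio caused by weak excitation light (left). The accuracy is comparable to that in Figure 3 and much higher than in Figure 4.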
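Comparisons of detection accuracy such as those in Figures 3 to 5 are often quantified with an overlap score between a predicted mask and a reference mask. The sketch below uses the Dice coefficient as one possible metric. The choice of metric, the function name `dice_coefficient`, and the toy masks are assumptions for illustration; the source does not say which measure was used.

```python
def dice_coefficient(predicted, reference):
    """Dice = 2 * |P and R| / (|P| + |R|) for two binary masks of equal shape."""
    if len(predicted) != len(reference) or any(
        len(p_row) != len(r_row) for p_row, r_row in zip(predicted, reference)
    ):
        raise ValueError("masks must have the same shape")
    overlap = pred_area = ref_area = 0
    for p_row, r_row in zip(predicted, reference):
        for p, r in zip(p_row, r_row):
            pred_area += bool(p)
            ref_area += bool(r)
            overlap += bool(p) and bool(r)
    if pred_area + ref_area == 0:
        return 1.0  # both masks empty: treat as perfect agreement
    return 2.0 * overlap / (pred_area + ref_area)

# Toy masks (assumed values): a reference segmentation and two predictions.
reference = [[0, 1, 1, 0], [0, 1, 1, 0]]
good_prediction = [[0, 1, 1, 0], [0, 1, 1, 0]]
poor_prediction = [[0, 1, 0, 0], [0, 0, 0, 1]]

good_score = dice_coefficient(good_prediction, reference)
poor_score = dice_coefficient(poor_prediction, reference)
print(good_score > poor_score)
```

A score of 1 means the prediction matches the reference exactly, and a score near 0 means almost no overlap.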

3) Segmentation based on morphological features

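When you want to segment an image based on its morphological features, conventional methods that threshold intensity values and colors struggle to achieve accurate segmentation. As a result, counting and measurement have to be done manually every time.

In contrast, TruAI can segment efficiently and accurately based on morphological features. Once the neural network has learned the segmentation results from hand-labeled images, it can apply the same approach to other data sets. For example, a neural network trained on hand-labeled images can count mitotic cells, as shown in the figures below.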

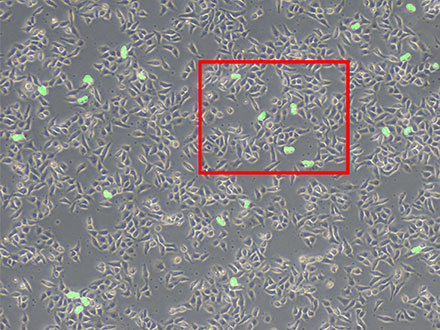

Figures 6 and 7: Figure 7 (right) is a higher-magnification view of the boxed region in Figure 6 (left).

Figure 6: Mitotic cells predicted by TruAI (green).

Figure 7: Although many cells are visible, only the dividing cells are detected (right).

4) Segmentation of tissue specimens

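TruAI can also be used to segment tissue specimens. For example, glomeruli are difficult to distinguish using conventional methods, but they can be segmented using TruAI.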

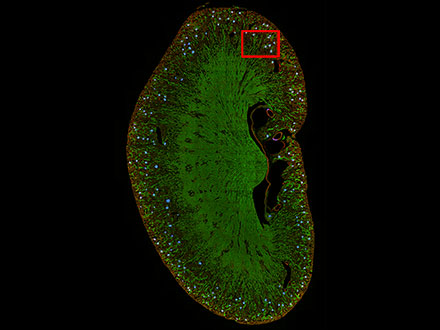

Figures 8 and 9: Figure 9 (right) is a higher-magnification view of the boxed region in Figure 8 (left).

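Figure 8: Glomerulus positions on a mouse kidney section predicted by TruAI (blue).

Figure 9: TruAI captures and identifies the features of the glomeruli (right).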

Conclusion

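Conventional segmentation methods can be difficult to apply and can damage the specimen. Our cellSens imaging software with deep-learning capability achieves accurate and efficient segmentation under conditions that minimize cell damage, such as label-free imaging or ultra-low light exposure. The software also makes it easier to segment tissue specimens based on their morphological features.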
