Join Manoel and Kathy as they explain how to unleash the power of deep learning to tackle challenging image analysis tasks, such as detecting cells in brightfield images and cell classification tasks that are difficult for the human eye.

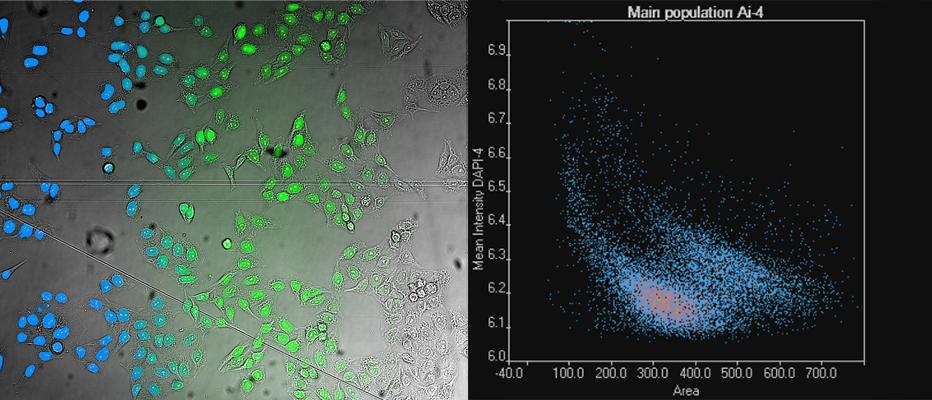

Webinar FAQs | Deep Learning

Can you do quantitative intensity measurements after deep learning algorithms are applied?

The deep learning algorithms predict the position of the fluorescent signal but not the intensity. However, you can perform segmentation based on deep learning and then use a secondary channel (e.g., fluorescence) to perform a quantitative intensity analysis. For example, if you are going label-free, want to measure protein expression, and irradiate your specimen with very low power, you can perform the segmentation in the brightfield image and then measure the fluorescence in the secondary channel. If your intensity is low but the background is constant, you can still perform a quantitative analysis, even if the signal is just a couple of counts above the camera noise.

Can deep learning software be applied to stained histology slides (e.g., H&E)?

Yes, there is a special neural network architecture in cellSens™ software for RGB images. This RGB network has an augmentation procedure that slightly modifies the contributions of the different colors, making the neural network robust to slight variations in RGB values and color balance.

How many images does deep learning software need for training?

The key parameter is the number of objects annotated rather than the number of images. In some cases, 20 to 30 objects would work, but then you can only use that neural network to analyze images with similar contrast. If you want to go beyond that, for example to go label-free and analyze objects in difficult conditions, you will typically need thousands of annotations. This volume of annotations can be achieved by generating the ground truth automatically, for example from a fluorescence channel.

Are Olympus deep learning algorithms based on U-Net?

Yes, they are inspired by U-Net. They are not exactly the same, but the overall structure is based on U-Net.
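To make the first answer above more concrete (segment with deep learning, then measure intensity in a secondary channel), here is a minimal Python sketch using NumPy. It assumes a label mask has already been produced by some segmentation step; it does not call cellSens software. The function name `mean_intensity_per_object`, the toy arrays, and the constant background value are all hypothetical and chosen only for illustration.

```python
import numpy as np

def mean_intensity_per_object(labels, fluorescence, background=0.0):
    """Return {label_id: background-corrected mean intensity}.

    labels       -- integer mask from a segmentation step (0 = background, an assumption)
    fluorescence -- secondary-channel image with the same shape as the mask
    background   -- constant background level to subtract (assumed known)
    """
    labels = np.asarray(labels)
    fluorescence = np.asarray(fluorescence, dtype=float)
    if labels.shape != fluorescence.shape:
        raise ValueError("mask and fluorescence image must have the same shape")

    results = {}
    for label_id in np.unique(labels):
        if label_id == 0:  # skip the background label
            continue
        pixels = fluorescence[labels == label_id]
        results[int(label_id)] = float(pixels.mean() - background)
    return results

# Toy data: two segmented objects on a constant background of 100 counts.
labels = np.array([[0, 1, 1, 0],
                   [0, 1, 1, 0],
                   [0, 0, 2, 2],
                   [0, 0, 2, 2]])
fluorescence = np.array([[100, 103, 105, 100],
                         [100, 104, 104, 100],
                         [100, 100, 110, 112],
                         [100, 100, 108, 110]])

measurements = mean_intensity_per_object(labels, fluorescence, background=100.0)
print(measurements)
```

In this toy example the objects sit only a few counts above the background, which mirrors the low-signal case described in the answer: because the background is constant, subtracting it still leaves a usable per-object value.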
What is the difference between deep neural networks and convolutional neural networks?

Neural networks have an input layer and an output layer. A deep neural network has at least one intermediate layer between the input and output layers, and generally several. A convolutional neural network is a class of deep neural network in which intermediate layers apply convolutions: small learned filters are slid across the layer's input to produce its output. Convolution is a mathematical operation that works very well for image analysis tasks, which is why convolutional neural networks are used to analyze microscopy images. Deep learning is also used in fields outside of image analysis. Those applications do not necessarily require convolutional networks and can use other types of networks instead.
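As an illustration of the convolution operation mentioned above, the following Python sketch slides a small filter over a toy image. It is a simplified stand-in, not the implementation used by any particular software: `conv2d_valid` is a hypothetical helper, the filter values are hand-picked (in a trained network they would be learned), and, as in most deep learning frameworks, the filter is applied without flipping.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and return the response map."""
    image = np.asarray(image, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the filter.
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Toy image: dark region on the left, bright region on the right.
image = np.array([[0, 0, 0, 1, 1],
                  [0, 0, 0, 1, 1],
                  [0, 0, 0, 1, 1],
                  [0, 0, 0, 1, 1]])

# Hand-picked filter that responds to dark-to-bright vertical edges.
edge_kernel = np.array([[-1, 0, 1],
                        [-1, 0, 1],
                        [-1, 0, 1]])

response = conv2d_valid(image, edge_kernel)
print(response)
```

The response is zero where the patch is uniform and positive where the patch covers the edge, which is the kind of local pattern detection that makes convolutional layers a good fit for images.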