TruAI Based on Deep-Learning Technology for Robust, Label-Free Nucleus Detection and Segmentation in Microwell Plates

Introduction

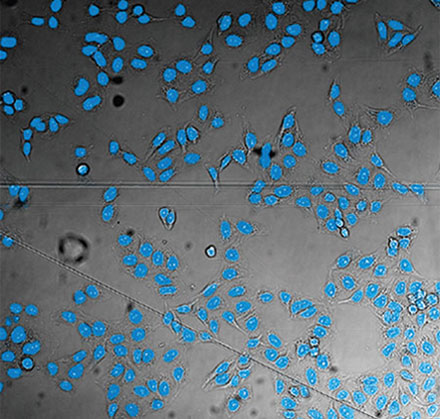

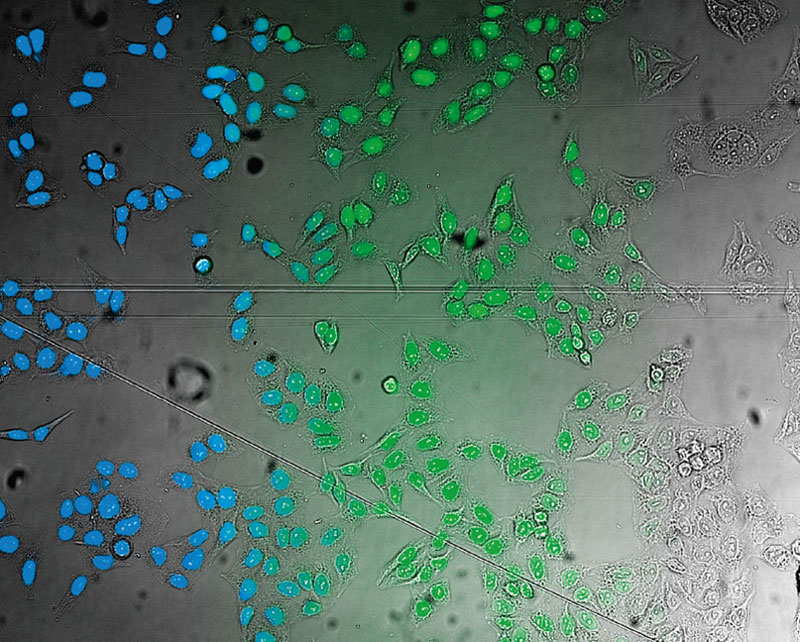

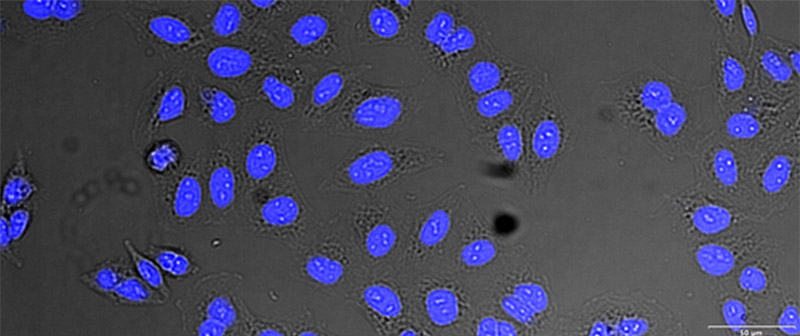

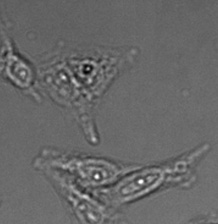

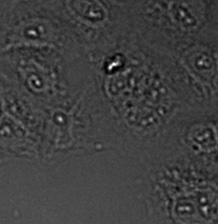

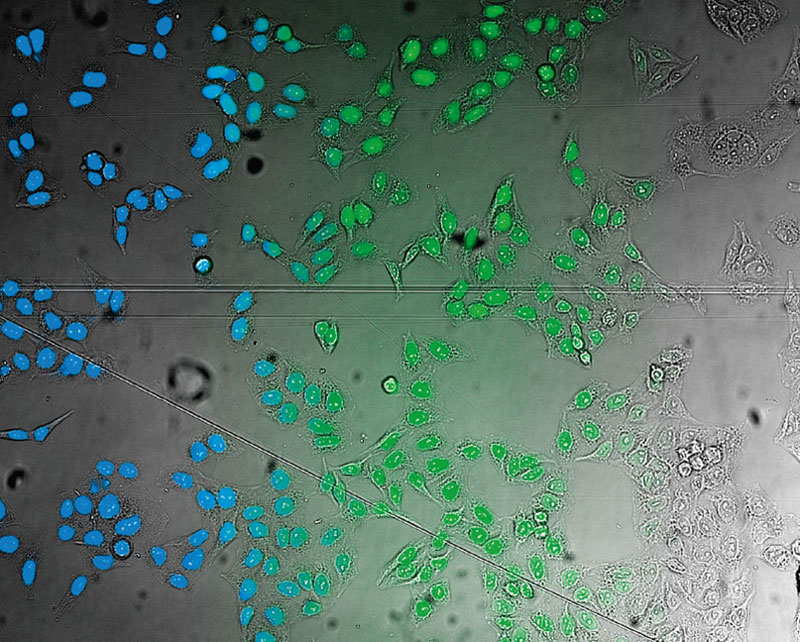

Labeling cells with chromatic and, in particular, fluorescent markers is invaluable for the observation and analysis of biological features and processes. Prior to the development of these labeling techniques, microscopic analysis of biological samples was performed through label-free observation. With the dramatic improvements in image analysis owing to machine-learning methods, label-free observation has recently seen a significant resurgence in importance. Deep-learning-based TruAI can provide new access to information encoded in transmitted-light images and has the potential to replace fluorescent markers used for structural staining of cells or compartments*1 (Figure 1).

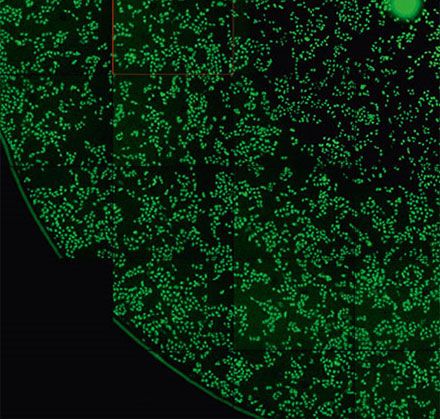

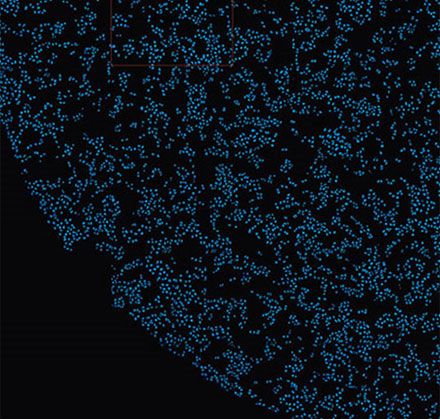

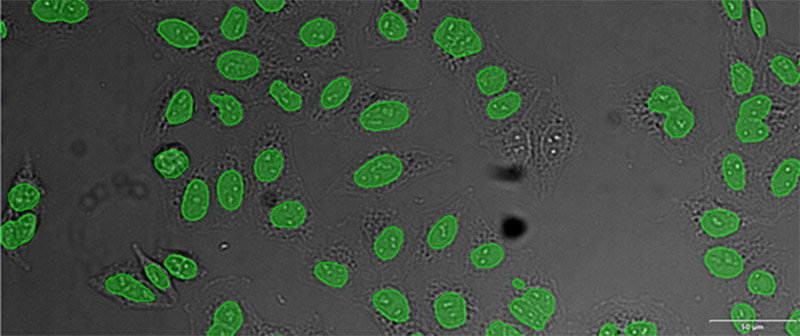

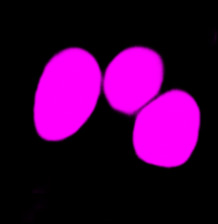

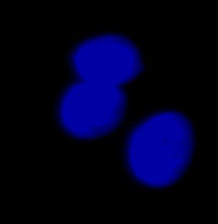

Figure 1

From left to right: TruAI prediction of nuclei positions (blue), green fluorescent protein (GFP) histone 2B labels showing nuclei (green), and raw brightfield transmission image (gray).

No label-free approach can completely replace fluorescence because information obtained from directly attaching labels to target molecules is still invaluable. However, gaining information about the sample with fewer labels has clear advantages:

- Reduced complexity in sample preparation

- Reduced phototoxicity

- Saving fluorescence channels for other markers

- Faster imaging

- Improved viability of living cells by avoiding stress from transfection or chemical markers

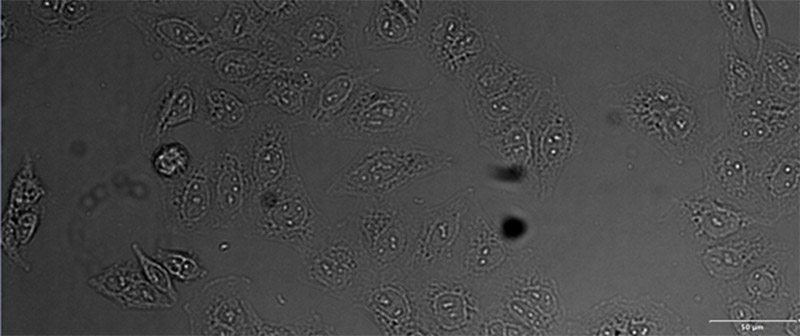

The limitations of label-free assays are due in large part to the lack of methods to robustly deal with the challenges inherent to transmitted-light image analysis. These constraints include:

- Low contrast, in particular, of brightfield images

- Dust and other imperfections in the optical path degrade image quality more than in fluorescence microscopy

- Additional constraints imposed by contrast-enhancing techniques, such as phase contrast or differential interference contrast (DIC)

- Higher level of background compared to fluorescence

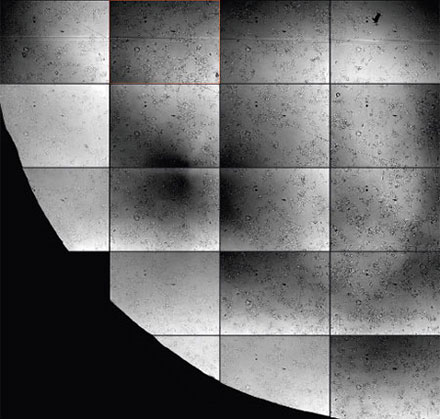

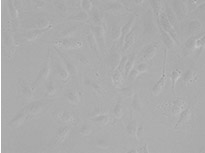

For live-cell imaging in microwell plates, it can be particularly challenging to acquire transmission images of sufficient quality for analysis because of the liquid meniscus of the buffer medium and other noise contributions (Figures 2*2 and 3). The challenges of microwell plates include:

- Phase contrast is often impossible

- DIC is only available in glass dishes

- Brightfield images are strongly shaded at the well borders

- Condensation artifacts may require removing the lid

- Particles in suspension increase background

Figure 2*2

Figure 3

The Technology Behind TruAI

Transmission brightfield imaging is a natural approach for label-free analysis applications, but it also presents image analysis and segmentation challenges that have long remained unsolved. To address these challenges, Olympus has integrated TruAI, an image analysis approach based on deep convolutional neural networks, into its cellSens and scanR software. Fully convolutional networks of this kind achieved state-of-the-art results in semantic segmentation when they were introduced (Long et al. 2014: Fully Convolutional Networks for Semantic Segmentation). They adapt well to a wide range of challenging image analysis tasks, which makes them well suited to the nontrivial analysis of transmission brightfield images for label-free analysis.

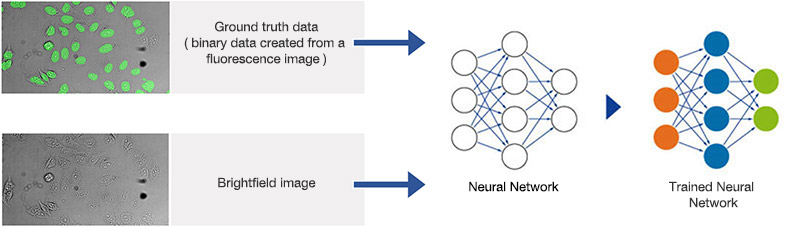

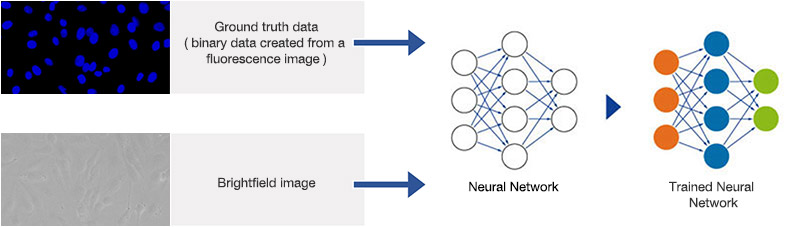

In a training phase, TruAI automatically learns how to predict the desired parameters, such as the positions and contours of cells or cell compartments. During the training phase, the network is fed with images and “ground truth” data (i.e., object masks where the objects of interest are annotated). Once the network has been trained, it can be applied to new images and predict the object masks with high precision.
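The article does not describe TruAI's actual architecture. The sketch below therefore uses a deliberately tiny stand-in to illustrate the training principle: a single 3×3 convolution followed by a sigmoid, which is about the smallest possible fully convolutional network. It is fitted to a ground-truth mask by gradient descent on the per-pixel binary cross-entropy. It assumes NumPy and synthetic data, and the names `conv3x3` and `train_step` are hypothetical.

```python
import numpy as np


def conv3x3(img, w, b):
    """Same-size 3x3 cross-correlation with zero padding: one convolutional layer."""
    h, wd = img.shape
    padded = np.pad(img, 1)
    out = np.full(img.shape, b, dtype=float)
    for dy in range(3):
        for dx in range(3):
            out += w[dy, dx] * padded[dy:dy + h, dx:dx + wd]
    return out


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_step(img, mask, w, b, lr=0.5):
    """One gradient-descent step on the mean per-pixel binary cross-entropy."""
    h, wd = img.shape
    prob = sigmoid(conv3x3(img, w, b))            # predicted nucleus probability
    eps = 1e-7                                    # avoids log(0)
    loss = -np.mean(mask * np.log(prob + eps) + (1 - mask) * np.log(1 - prob + eps))
    grad_z = (prob - mask) / mask.size            # dLoss/dLogit for sigmoid + BCE
    padded = np.pad(img, 1)
    grad_w = np.empty_like(w)
    for dy in range(3):
        for dx in range(3):
            grad_w[dy, dx] = np.sum(grad_z * padded[dy:dy + h, dx:dx + wd])
    return w - lr * grad_w, b - lr * grad_z.sum(), loss


# Synthetic example: two "nuclei" in a noisy image; mask is the ground truth.
rng = np.random.default_rng(0)
mask = np.zeros((32, 32))
mask[8:16, 8:16] = 1
mask[20:28, 18:26] = 1
img = 0.5 * mask + 0.1 * rng.standard_normal(mask.shape)

w, b = np.zeros((3, 3)), 0.0
losses = []
for _ in range(200):
    w, b, loss = train_step(img, mask, w, b)
    losses.append(loss)
```

The loss starts at ln 2 (every pixel predicted at 0.5) and decreases as the filter learns to respond to the nuclei. A real segmentation network stacks many such layers with nonlinearities and downsampling, but it is trained on the same image/mask principle.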

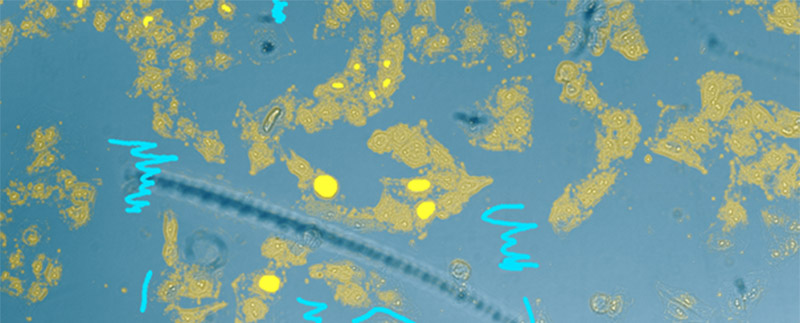

Typically, in machine learning, the annotations (for example, the boundaries of cells) are provided by human experts. This can be a tedious and time-consuming step because neural networks require large amounts of training data to fully exploit their potential (Figure 4). A convenient, easy-to-use annotation tool is therefore essential.

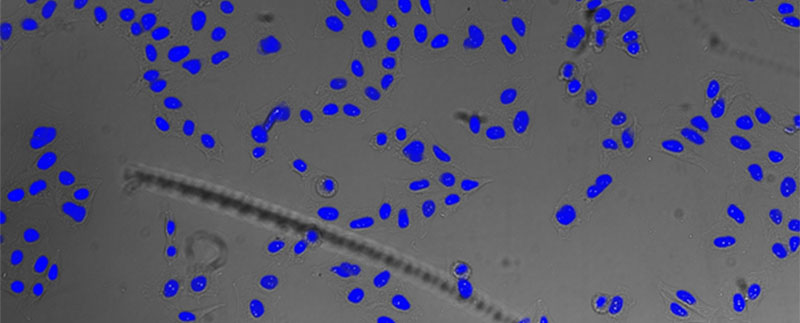

A much simpler alternative is to let the microscope generate the ground truth automatically by acquiring reference images during the training phase. For example, to teach the neural network robust detection and segmentation of nuclei in brightfield images under difficult conditions, the nuclei can be labeled with a fluorescent marker. The microscope can then automatically acquire a large number of image pairs (brightfield and fluorescence). In the fluorescence channel, the nuclei can easily be detected by automated thresholding (Figures 6 and 7). These detected objects serve as the ground truth for training the neural network, and the resulting network enables the researcher to find the nuclei correctly using only the brightfield images (Figure 5*2).
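The thresholding step that turns the fluorescence channel into ground truth can be sketched as follows. The article does not name the thresholding method, so this sketch assumes Otsu's method followed by 4-connected component labeling. It is implemented with NumPy only, runs on a synthetic image, and uses hypothetical function names.

```python
from collections import deque

import numpy as np


def otsu_threshold(img, bins=256):
    """Otsu's method: pick the threshold that maximizes between-class variance."""
    hist, edges = np.histogram(img, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)            # pixel count at or below each bin
    w1 = w0[-1] - w0                              # pixel count above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = np.divide(m0, w0, out=np.zeros_like(m0), where=w0 > 0)
    mu1 = np.divide(m0[-1] - m0, w1, out=np.zeros_like(m0), where=w1 > 0)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]          # upper edge of the best split bin


def label_objects(mask):
    """4-connected component labeling by breadth-first search (0 = background)."""
    labels = np.zeros(mask.shape, dtype=int)
    n = 0
    for y, x in zip(*np.nonzero(mask)):
        if labels[y, x]:
            continue
        n += 1
        labels[y, x] = n
        queue = deque([(y, x)])
        while queue:
            cy, cx = queue.popleft()
            for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                inside = 0 <= ny < mask.shape[0] and 0 <= nx < mask.shape[1]
                if inside and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = n
                    queue.append((ny, nx))
    return labels, n


def ground_truth_from_fluorescence(fluo):
    """Threshold a nuclear fluorescence image and label each nucleus."""
    mask = fluo >= otsu_threshold(fluo)
    labels, n_nuclei = label_objects(mask)
    return mask, labels, n_nuclei


rng = np.random.default_rng(1)
fluo = 10 + 2 * rng.standard_normal((40, 40))     # background plus noise
fluo[5:12, 5:12] += 90                            # nucleus 1 (7 x 7 pixels)
fluo[25:33, 20:30] += 90                          # nucleus 2 (8 x 10 pixels)
mask, labels, n_nuclei = ground_truth_from_fluorescence(fluo)
```

Because this step needs no manual input, it can be repeated for every acquired image pair. That is what makes large training sets cheap to produce.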

Figure 4

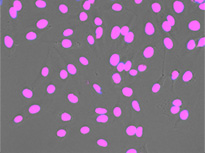

Nucleus detection result from a transmission image using general machine learning. The classifier was trained by manually marking nuclei in yellow and background in light blue. The detection accuracy is low.

Figure 5*2

Nucleus detection result from transmission image by TruAI. Highly accurate detection is possible.

Since this approach to ground truth generation requires little human interaction, large amounts of training image pairs can be acquired in a short time. This makes it possible for the neural network to adapt to all kinds of variations and distortions during the training, which results in a neural network model that is robust against these challenging issues.

Figure 6

Figure 7

Label-Free Segmentation Training by TruAI

To demonstrate a typical use case, a whole 96-well plate with variations in buffer filling level, condensation effects, and meniscus-induced imaging artifacts was imaged with the following parameters:

- UPLSAPO objective (10X magnification, NA = 0.6)

- Adherent HeLa cells in liquid buffer (fixed)

- GFP channel: histone 2B GFP as a marker for the nucleus (ground truth)

- Brightfield channel: three Z-slices with a 6 μm step size (to include defocused images in the training data)

The ground truth for the neural network training is generated by automated segmentation of the fluorescence images using conventional methods. Including slightly defocused images in the example data set during training allows the neural network to better account for small focus variations later. The neural network is trained with pairs of ground truth and brightfield images, as depicted in Figure 8*2. Five wells with 40 positions each are used as training data. The training phase took about 160 minutes using an NVIDIA GTX 1080Ti graphics card (GPU).
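The training set described above can be enumerated as a quick bookkeeping check. The sketch assumes that the three brightfield slices are centered on the in-focus plane (offsets of −6, 0, and +6 µm) and that every slice at a position is paired with the same fluorescence-derived mask. The article states only "three Z-slices with a 6 μm step size", and the `TrainingPair` class is hypothetical.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class TrainingPair:
    well: int             # well number on the plate
    position: int         # imaging position within the well
    z_offset_um: float    # brightfield focus offset from the in-focus slice

    @property
    def mask_key(self):
        """All Z-slices at one position share the same fluorescence ground truth."""
        return (self.well, self.position)


def build_training_pairs(wells, positions_per_well, n_slices=3, step_um=6.0):
    """Enumerate brightfield slices, each paired with its position's ground-truth mask."""
    half = (n_slices - 1) / 2
    offsets = [(i - half) * step_um for i in range(n_slices)]
    return [
        TrainingPair(well, pos, z)
        for well, pos, z in product(wells, range(positions_per_well), offsets)
    ]


pairs = build_training_pairs(wells=range(1, 6), positions_per_well=40)
print(len(pairs))                                  # 600 brightfield images
print(len({p.mask_key for p in pairs}))            # 200 ground-truth masks
print(sorted({p.z_offset_um for p in pairs}))      # [-6.0, 0.0, 6.0]
```

Under these assumptions, 5 wells × 40 positions yield 200 ground-truth masks and 600 brightfield training images. Reusing one mask for the defocused slices is what teaches the network to tolerate small focus errors.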

Figure 8*2

Schematic showing the training process of the neural network.

Label-Free Nucleus Detection and Segmentation

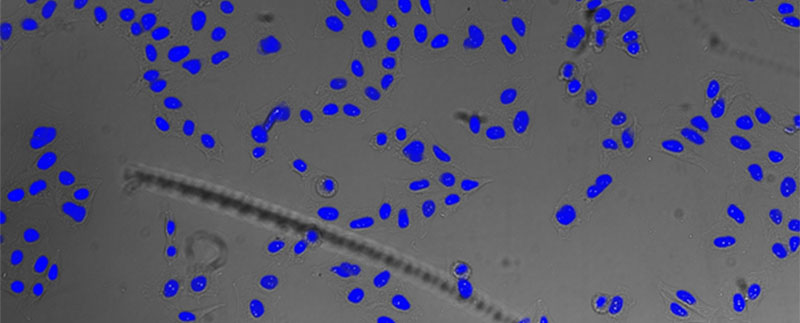

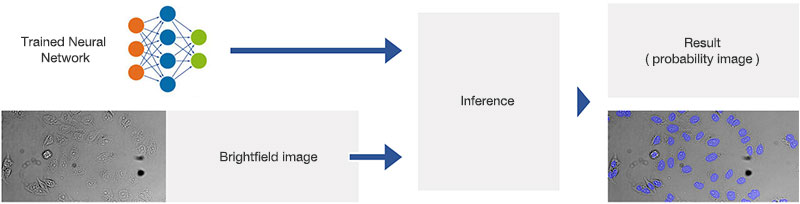

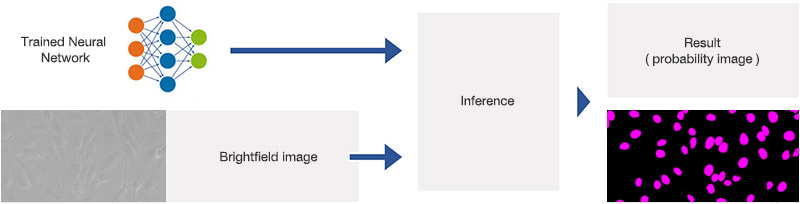

During the detection phase, the learned neural network model is applied to brightfield images, as depicted in Figure 9*2. For each pixel, it predicts whether it belongs to a nucleus or not. The result is a probability image, which can be visualized as shown in Figures 10*2 and 11*2 by color-coding the probability and generating an overlay image.
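A color-coded overlay of the probability image can be produced with simple alpha blending. The sketch assumes NumPy, a grayscale brightfield image, and a probability map in [0, 1]. Blue is used because Figure 1 shows the TruAI prediction in blue. The function name and the `max_alpha` parameter are hypothetical, since cellSens' own rendering is not described in the article.

```python
import numpy as np


def probability_overlay(brightfield, prob, color=(0.0, 0.0, 1.0), max_alpha=0.6):
    """Blend a per-pixel probability map onto a grayscale image as a colored overlay."""
    bf = brightfield.astype(float)
    lo, hi = bf.min(), bf.max()
    gray = (bf - lo) / (hi - lo) if hi > lo else np.zeros_like(bf)
    rgb = np.repeat(gray[..., None], 3, axis=-1)              # gray -> RGB in [0, 1]
    alpha = (max_alpha * np.clip(prob, 0.0, 1.0))[..., None]  # opacity follows probability
    return (1 - alpha) * rgb + alpha * np.asarray(color)


bf = np.array([[0.0, 1.0], [0.5, 1.0]])
prob = np.array([[0.0, 1.0], [0.0, 0.0]])
overlay = probability_overlay(bf, prob)   # RGB array of shape (2, 2, 3)
```

Pixels with zero probability keep their gray value. Confident nucleus pixels are tinted toward blue, so uncertain regions remain visible as faint tints.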

Figure 9*2

Schematic showing the application (inference) of the trained neural network.

The images in Figures 10*2 and 11*2 show that the neural network, which learned to predict cellular nuclei from brightfield images, finds the nuclei at the exact positions they appear in the brightfield image, clearly demonstrating the value of the AI-based approach:

- High-precision detection and segmentation of nuclei

- Optimal for cell counting and geometric measurements, like area or shape

- Processing time of less than 1 second per position (on an NVIDIA GTX 1080 Ti GPU)
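Counting nuclei and measuring their areas from a probability image can be sketched as thresholding, labeling connected objects, and counting pixels per object. This sketch assumes a 0.5 probability threshold and a hypothetical pixel size. It implements labeling by iterative minimum-label propagation with NumPy only. TruAI's actual post-processing, such as how touching nuclei are split, is not described in the article.

```python
import numpy as np


def label_components(mask):
    """4-connected labeling by iterative minimum-label propagation (0 = background)."""
    big = mask.size + 1                            # sentinel larger than any label
    labels = np.where(mask, np.arange(1, mask.size + 1).reshape(mask.shape), big)
    while True:
        p = np.pad(labels, 1, constant_values=big)
        neighbors = np.minimum.reduce(
            [labels, p[:-2, 1:-1], p[2:, 1:-1], p[1:-1, :-2], p[1:-1, 2:]])
        updated = np.where(mask, neighbors, big)
        if np.array_equal(updated, labels):        # converged: one label per object
            break
        labels = updated
    roots = np.unique(labels[mask])
    out = np.zeros(mask.shape, dtype=int)
    out[mask] = np.searchsorted(roots, labels[mask]) + 1
    return out, roots.size


def measure_nuclei(prob, pixel_size_um, threshold=0.5):
    """Binarize a probability image, then count objects and measure their areas."""
    mask = prob > threshold
    labels, count = label_components(mask)
    areas_px = np.bincount(labels.ravel(), minlength=count + 1)[1:]
    return count, areas_px * pixel_size_um ** 2


prob = np.zeros((20, 20))
prob[2:6, 2:6] = 0.9          # 16-pixel object
prob[10:15, 10:14] = 0.8      # 20-pixel object
prob[0, 19] = 0.3             # below threshold, ignored
count, areas = measure_nuclei(prob, pixel_size_um=0.5)   # hypothetical pixel size
print(count, areas)           # 2 [4. 5.]
```

Shape descriptors such as circularity would be computed per labeled object in the same way. They are omitted here to keep the sketch short.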

Figure 10*2

Figure 11*2

Validation of the Results

Predictions by TruAI can be very precise and robust, but it is essential to validate them carefully to help ensure that no artifacts or other errors are produced. In this respect, TruAI is similar to a classical image analysis pipeline; however, its errors are harder to anticipate without careful validation because they depend on the data used for training.

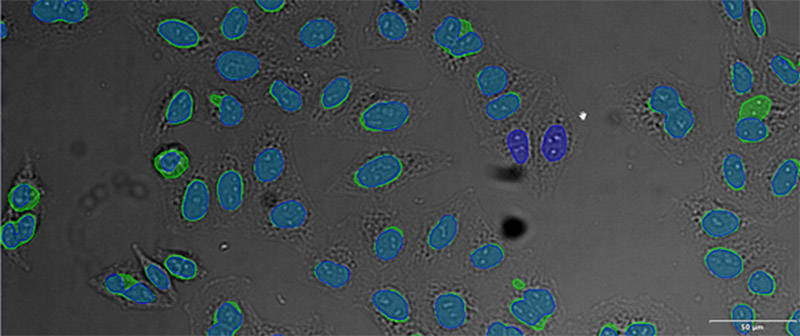

Olympus cellSens imaging software is well suited for systematic validation of the TruAI results. Figure 12*2 compares the software results to the fluorescence-based analysis on a single image. It shows that Olympus’ TruAI results correspond well with the fluorescence results.

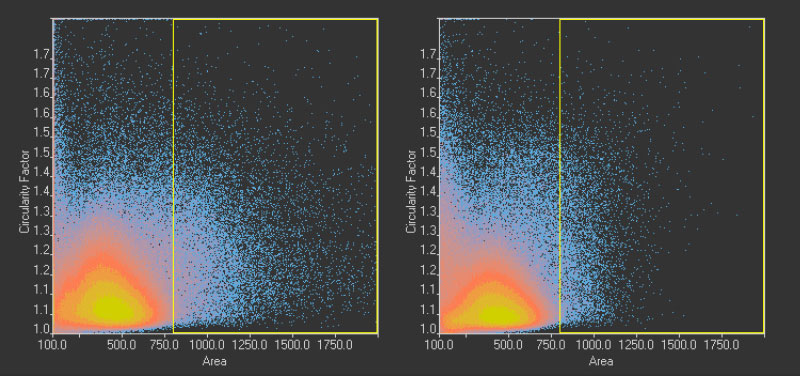

To validate in more detail, Olympus scanR software can be used. For example, the overall cell counts of the wells can easily be compared in the scanR software (Figure 13).

However, the total cell count using TruAI is around 3% higher than the count based on fluorescence imaging (1.13 million versus 1.10 million nuclei).
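As a quick check of the rounded totals quoted for the two methods:

$$\frac{1.13\times 10^{6} - 1.10\times 10^{6}}{1.10\times 10^{6}} = \frac{0.03}{1.10} \approx 0.027 \approx 3\,\%$$

Because both totals are rounded to three significant figures, the exact difference may deviate slightly from 2.7%.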

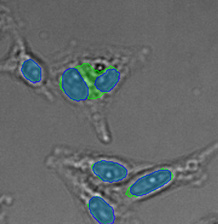

One reason for this discrepancy was that TruAI detected nuclei whose GFP signal was too weak to be detected in the fluorescence channel. A second reason was identified by examining objects larger than ~340 µm².

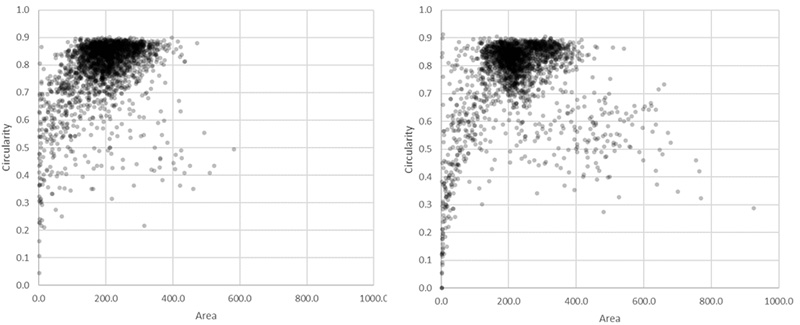

These overall values revealed 22,000 (2%) unusually large objects (>340 µm²) in the fluorescence plot, compared with 7,000 (0.6%) in the TruAI results.
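Flagging suspiciously large objects is a simple area filter applied to the per-object measurements. The sketch assumes NumPy and a list of object areas in µm². The 340 µm² limit is taken from the text, and the function name is hypothetical.

```python
import numpy as np


def large_object_stats(areas_um2, limit_um2=340.0):
    """Count objects above an area limit and return (count, fraction of all objects)."""
    areas = np.asarray(areas_um2, dtype=float)
    if areas.size == 0:
        return 0, 0.0
    n_large = int(np.count_nonzero(areas > limit_um2))
    return n_large, n_large / areas.size


print(large_object_stats([120.0, 95.5, 410.0, 150.2, 700.0]))   # (2, 0.4)

# Fractions implied by the rounded totals reported in the text:
print(f"{22_000 / 1_100_000:.1%}")   # 2.0%  (fluorescence)
print(f"{7_000 / 1_130_000:.1%}")    # 0.6%  (TruAI)
```

A high fraction of oversized objects in one method but not the other is a hint that touching nuclei are being merged, which can then be confirmed by visual inspection.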

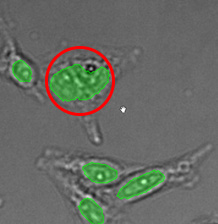

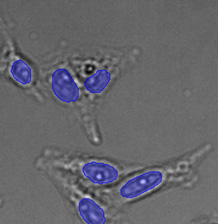

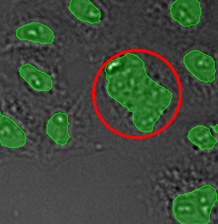

Figure 15*2 shows a selection of these unusually large objects side by side, indicating that TruAI better separates nuclei that are in close contact.

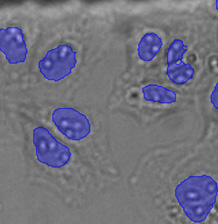

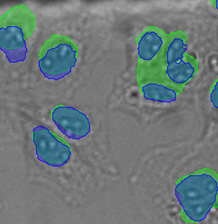

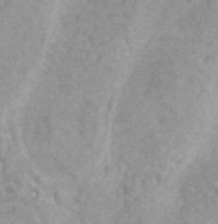

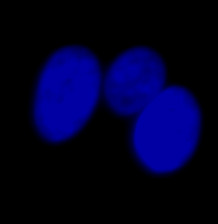


Figure 12*2

Example image of the validation data set. (A) GFP nuclear labels, (B) brightfield image, (C) TruAI prediction of nuclei positions from the brightfield image, and (D) overlay of GFP label (green) and TruAI result (blue).


Figure 13

scanR system: Comparison of cell counts of the reference method, counted on the GFP channel with a conventional approach (left) and TruAI, counted on the brightfield channel using the neural network (right). Wells 1–5 have been used for the training and must not be considered for validation.
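Because wells 1 to 5 were used for training, a fair comparison must exclude them before counts are summed. The sketch below assumes per-well counts are available as a plain dictionary, for example exported from the analysis software. The function name and the example counts are hypothetical and for illustration only.

```python
TRAINING_WELLS = frozenset({1, 2, 3, 4, 5})


def compare_counts(per_well, exclude=TRAINING_WELLS):
    """Compare reference (GFP) and TruAI counts, skipping wells used for training.

    per_well maps well number -> (reference_count, truai_count).
    """
    kept = {w: c for w, c in per_well.items() if w not in exclude}
    ref_total = sum(ref for ref, _ in kept.values())
    ai_total = sum(ai for _, ai in kept.values())
    rel_diff = {w: (ai - ref) / ref for w, (ref, ai) in kept.items()}
    return ref_total, ai_total, rel_diff


# Hypothetical counts for illustration only.
counts = {1: (11_500, 11_600), 6: (12_000, 12_300), 7: (11_000, 11_330)}
ref_total, ai_total, rel_diff = compare_counts(counts)
print(ref_total, ai_total)   # 23000 23630
```

Per-well relative differences also make it easy to spot individual wells, for example those with strong meniscus or condensation artifacts, where the two methods disagree more than elsewhere.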

Figure 14*2

Scatter plot showing circularity versus area distribution of the 1.10 million nuclei detected in the GFP channel (left) and the 1.13 million nuclei detected in the brightfield channel by the TruAI (right). The yellow rectangle indicates unusually large objects.


Figure 15*2

Three examples of unusually large objects of the whole validation data set. (A) GFP nuclear labels, (B) brightfield image, and (C) TruAI detection of nuclei positions from the brightfield image.

Conclusions

The TruAI extension for Olympus’ cellSens software can reliably derive nuclei positions and masks in microwells solely from brightfield transmission images, after only a brief training stage. After automated object detection, the data could easily be edited for training. The network generated for analysis of transmission images can compete with, or even outperform, the classical approach with a fluorescence label.

The use of Olympus’ TruAI offers significant benefits to many live-cell analysis workflows. Aside from the improved accuracy, using brightfield images also avoids the need for genetic modifications or nuclear markers. Not only does this save time on sample preparation, it also frees the fluorescence channel for other markers. Furthermore, the shorter exposure times for brightfield imaging reduce phototoxicity and save imaging time.

Authors

Dr. Matthias Genenger,

Dr. Mike Woerdemann

Product Manager

Olympus Soft Imaging Solutions GmbH

Münster, Germany

*1 Christiansen et al., In Silico Labeling: Predicting Fluorescent Labels in Unlabeled Images, Cell, 2018.


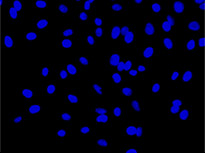


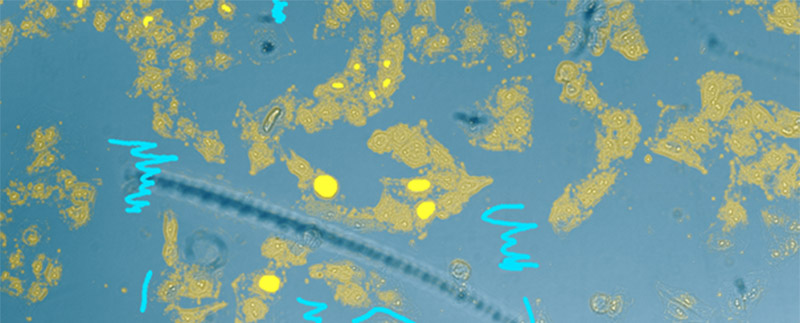

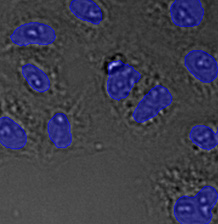

Figure 2

Detailed view showing the strong background and inhomogeneity that can occur in brightfield transmission imaging. Note the out-of-focus contributions from unwanted particles.

The Technology Behind TruAI

Transmission brightfield imaging is a natural approach for label-free analysis applications, but it also presents image analysis and segmentation challenges that have long remained unsolved. To address these challenges, Olympus has integrated TruAI, an image analysis approach based on deep convolutional neural networks, into its cellSens software. Fully convolutional networks of this kind achieved state-of-the-art results in semantic segmentation when they were introduced (Long et al. 2014: Fully Convolutional Networks for Semantic Segmentation). They adapt well to a wide range of challenging image analysis tasks, which makes them well suited to the nontrivial analysis of transmission brightfield images for label-free analysis.

In a training phase, TruAI automatically learns how to predict the desired parameters, such as the positions and contours of cells or cell compartments. During the training phase, the network is fed with images and “ground truth” data (i.e., object masks where the objects of interest are annotated). Once the network has been trained, it can be applied to new images and predict the object masks with high precision.

Typically, in machine learning, the annotations (for example, the boundaries of cells) are provided by human experts. This can be a tedious and time-consuming step because neural networks require large amounts of training data to fully exploit their potential (Figure 3). A convenient, easy-to-use annotation tool is therefore essential.

TruAI enables a much simpler approach. For example, to teach the neural network robust detection and segmentation of nuclei in brightfield images under difficult conditions, the nuclei can be labeled with a fluorescent marker. The microscope can then automatically acquire a large number of image pairs (brightfield and fluorescence). In the fluorescence channel, the nuclei can easily be detected by automated thresholding. These detected objects serve as the ground truth for training the neural network, and the resulting network enables the researcher to find the nuclei correctly using only the brightfield images (Figure 4*2).

Figure 3

Nucleus detection result from a transmission image using general machine learning. The classifier was trained by manually marking nuclei in yellow and background in light blue. The detection accuracy is low.

Figure 4*2

Nucleus detection result from transmission image by TruAI. Highly accurate detection is possible.

Since this approach to ground truth generation requires little human interaction, large amounts of training image pairs can be acquired in a short time. This makes it possible for the neural network to adapt to all kinds of variations and distortions during the training, which results in a neural network model that is robust against these challenging issues.

Label-Free Segmentation Training

To demonstrate a typical use case, a whole 96-well plate with variations in buffer filling level, condensation effect, and meniscus-induced imaging artifacts was imaged with the following parameters:

- LUCPLFLN20XPH objective (NA = 0.45)

- Adherent HeLa cells in liquid buffer (fixed)

- Fluorescence channel: DAPI as a marker for the nucleus (ground truth)

- Transmission channel: brightfield

The ground truth for the neural network training is generated by automated segmentation of the fluorescence images using conventional methods. Including slightly defocused images in the example data set during training allows the neural network to better account for small focus variations later. The neural network is trained with pairs of ground truth and brightfield images, as depicted in Figure 5*2. The training phase took about 180 minutes using an NVIDIA GTX 1060 graphics card (GPU).

Figure 5*2

Schematic showing the training process of the neural network.

Label-Free Nucleus Detection and Segmentation

During the detection phase, the learned neural network model is applied to brightfield images, as depicted in Figure 6*2. For each pixel, it predicts whether that pixel belongs to a nucleus. The result is a probability image, which can be visualized by color-coding the probability and generating an overlay image.

Figure 6*2

Schematic showing the application (inference) of the trained neural network.

The neural network, which learned to predict cellular nuclei from brightfield images, finds the nuclei at the exact positions they appear in the brightfield image, clearly demonstrating the value of the AI-based approach:

- High-precision detection and segmentation of nuclei

- Optimal for cell counting and geometric measurements, like area or shape

- Less than 1-second processing time per position (on NVIDIA GTX 1060 GPU)

Validation of the Results

Deep-learning predictions can be very precise and robust, but it is essential to validate them carefully to help ensure that no artifacts or other errors are produced. In this respect, deep learning is similar to a classical image analysis pipeline; however, its errors are harder to anticipate without careful validation because they depend on the data used for training.

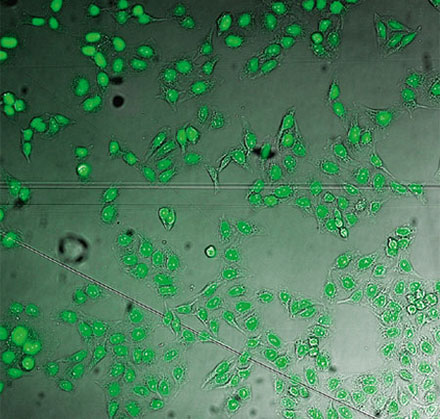

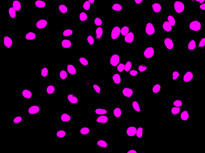

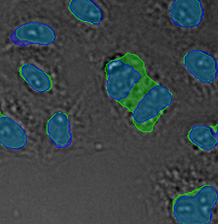

Olympus cellSens imaging software is well suited for systematic validation of the AI results. Figure 7 compares the software results to the fluorescence-based analysis on a single image. It shows that Olympus’ AI results correspond well with the fluorescence results.

However, the total cell count using the deep-learning approach is around 3% higher than the count based on fluorescence imaging (2523 cells versus 2459 nuclei).

One reason for this discrepancy was that TruAI detected nuclei whose GFP signal was too weak to be detected in the fluorescence channel. A second reason was identified by examining objects larger than ~400 µm².

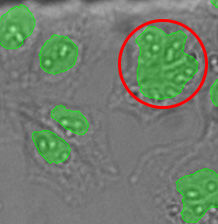

These overall values revealed 126 (5.1%) unusually large objects (>400 µm²) in the fluorescence plot, compared with 24 (0.95%) in the TruAI results.
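The percentages quoted for this data set can be reproduced directly from the object counts given in the text. The helper below is a hypothetical convenience for such checks and is not part of cellSens.

```python
def summarize(ref_total, ai_total, ref_large, ai_large):
    """Relative count difference and large-object fractions for two segmentations."""
    return {
        "count_difference": (ai_total - ref_total) / ref_total,
        "large_fraction_ref": ref_large / ref_total,
        "large_fraction_ai": ai_large / ai_total,
    }


stats = summarize(ref_total=2459, ai_total=2523, ref_large=126, ai_large=24)
for name, value in stats.items():
    print(f"{name}: {value:.2%}")
# count_difference: 2.60%
# large_fraction_ref: 5.12%
# large_fraction_ai: 0.95%
```

The 2.6% count difference is what the text rounds to "around 3%". Each large-object fraction is taken relative to its own method's total.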

Figure 9 shows a selection of these unusually large objects side by side, indicating that TruAI better separates nuclei that are in close contact.


Figure 7

Example image of the validation data set. (A) GFP nuclear labels, (B) brightfield image, (C) AI prediction of nuclei positions from the brightfield image, and (D) overlay of GFP label (green) and AI result (blue).

Figure 8*2

Scatter plot showing circularity versus area distribution of the 2459 nuclei detected in the GFP channel (left) and the 2523 nuclei detected in the brightfield channel by the AI (right). The yellow rectangle indicates unusually large objects.

Figure 9

Three examples of unusually large objects from the whole validation data set. (A) GFP nuclear labels, (B) brightfield image, and (C) TruAI detection of nuclei positions from the brightfield image.

Conclusions

The deep learning extension for Olympus’ cellSens software can reliably derive nuclei positions and masks in microwells solely from brightfield transmission images, after only a brief training stage. After automated object detection, the data could easily be edited for training. The network generated for analysis of transmission images can compete with, or even outperform, the classical approach with a fluorescence label.

The use of Olympus’ TruAI offers significant benefits to many live-cell analysis workflows. Aside from the improved accuracy, using brightfield images also avoids the need for genetic modifications or nuclear markers. Not only does this save time on sample preparation, it also frees the fluorescence channel for other markers. Furthermore, the shorter exposure times for brightfield imaging reduce phototoxicity and save imaging time.


*2 Although the HeLa cell line became one of the most important cell lines in medical research, it is imperative that we recognize that Henrietta Lacks’ contribution to science happened without her consent. This injustice, while leading to key discoveries in immunology, infectious disease, and cancer, also raised important conversations about privacy, ethics, and consent in medicine.

To learn more about the life of Henrietta Lacks and her contribution to modern medicine, visit the Henrietta Lacks Foundation:

http://henriettalacksfoundation.org/
